The question then arises – is there any way to influence my sensations to get overall better feelings?

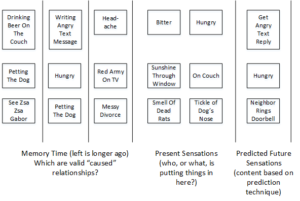

I will start the analysis by examining the end state in greater detail. Since the valuation function previously described is apparently somehow related to the sensations in my memory, this would imply the following possibilities for improving my value function:

- Remove bad sensations

- Add good sensations

- Change bad sensations to good ones (or at least improve them)

Note that this assumes that what I see in memory is actually related to the value sensations in this way. Since correlation is not “causation” (what that means will be called into further question later on), the two could theoretically be independent. However, what I can perceive in my consciousness is all I’ve got to work with. A constructive interpretation of the future value-maximization hypothesis suggests that I can use this data. By constructive I mean that there is actually a way to improve the current state, and that the mere act of seriously considering the hypothesis does not put me in a dead-end state from which it is impossible to recover back to the original. (Note: the use of the term “future” here does not exclude the possibility of modifying or removing memories which bring suboptimal value.)

Now, by the metaphysical definition of my future sensation set being possibly different from my present one, there is no evidence that the constructive interpretation is valid beyond the limits of my present perception. To have such evidence would suggest that I really did know the future, which I don’t believe I know for sure at the present time. Any assumption about the hypothesis that value can be maximized therefore stems from my memory-time sensations and their relationship, with the assumption that this has some relationship to the future, and that the knowledge of this relationship in turn is a good predictor of future value. In other words, given the metaphysical primitives previously outlined, the assumption that future value can be maximized at least partially, and maybe fully, consists of the assumption that my knowledge processes can discover a value-maximizing relationship.

Why choose correlation (and negative correlation, etc.) as the first/most immediate prediction primitive, and not some other relationship among the sensations? The above hypothesis, which operates on subsets of my sensations with knowledge processes, is, restated, the general, non-constructive definition of correlation with subsets of sensation set and the value sensations associated with the future states as the two factors related in the correlation. Thus, the validity of some rough concept of correlation logically results from the assumption of the hypothesis that future value can be maximized. Simple correlation also happens to be an extremely good predictor for many classes of sensations, namely those associated with “non-sentient” phenomena in my conscious knowledge base. Further, I know that certain sensations, in particular present-time sensations as encoded in my memory as memory-time sensations, are very strongly correlated with value sensations.

Based on memory history previously outlined, removing and changing bad sensations seem most likely to work for weaker memory sensations. They do not seem likely for core sensations or for strong memory sensations. Adding sensations seems to always be taking place, so that’s another good candidate. Since added sensations are both core sensations and memory sensations, if I could influence the type and character of the added sensations, or add new ones elsewhere, this would give me a way to influence the most important sensations in my overall value function.

So is it even meaningful to talk about influencing the added sensations? If I have a descriptive view of the world, in which I talk about correlation, do I even have a way to say what influence or actions are?

I certainly have some sensations which are correlated with my perception of intention. For example, muscle movements correlate very highly (though not absolutely, as in the case of touching the hot stove) with the perception of intention. From a statistical perspective, I could take these phenomena, do the simplest thing (take the correlation percentage as the prediction percentage) and come up with a predictive framework. But are the muscle movements and intentions “meaningfully” caused?

To say that something is “caused” means that an antecedent measurement is followed by a subsequent measurement, under some conditions. For example, throwing the ball against the wall will cause the ball to hit the wall, unless your dog jumps up and grabs it, then growls and shakes the ball before handing it back to you. Adding the usual statistical qualifiers of “within known experience” and “correlation of known data, even to 100%, does not metaphysically guarantee future results”, it is possible to represent this construct within a descriptive framework without any trouble at all.

The real issue here is that “influence”, “actions”, and “will” are also associated with the assumption that some “prior state of being”, associated with the human “mind”, is the antecedent. But what is this “mind”? Go to the dictionary and it says that it’s thoughts, feelings, etc. – which is just restating the original problem. So, saying that “you willed something to happen” is effectively begging the question (meaning it forces you to assume the truth of the proposition, that you can will something, prior to actually addressing the truth of the proposition). We cannot deal with it at that level.

So back to basics. I could brute-force apply the “caused” test to everything in my memory. This results in huge numbers of “caused” events. It also results in huge numbers of failures, where the same phenomena applied in the same conditions do not have the same result. This simplistic “caused” relation itself does not have a very useful application, because it rings up huge numbers of results and fails the rest, without giving any greater assurance of what is causable and what is not. I could tighten it up by applying various tests of statistical robustness, but then I have huge holes (for example what the weather is three weeks from now) and it still isn’t getting me closer to what I really want – how to change sensations that we don’t even have yet (because based on previous history, I think they are going to be added). Therefore, screw the simple, conventional view of causality.
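As a minimal sketch of what this brute-force test might look like, assume memory can be flattened into a list of (conditions, antecedent, result) records. That representation, the function name `caused_test`, and the sample records are all hypothetical and chosen only for illustration.

```python
from collections import defaultdict

def caused_test(memory):
    """Apply the simplistic "caused" test to every record in memory.

    `memory` is an assumed list of (conditions, antecedent, result) tuples.
    An antecedent counts as "caused" only if, under the same conditions,
    it was always followed by the same result.
    """
    outcomes = defaultdict(set)
    for conditions, antecedent, result in memory:
        outcomes[(conditions, antecedent)].add(result)

    # Exactly one distinct result seen: the test passes.
    caused = {key: next(iter(results))
              for key, results in outcomes.items() if len(results) == 1}
    # More than one distinct result seen: the test fails.
    failures = {key: results
                for key, results in outcomes.items() if len(results) > 1}
    return caused, failures

# Illustrative records only, loosely following the ball-and-dog example.
memory = [
    ("no dog", "throw ball at wall", "ball hits wall"),
    ("no dog", "throw ball at wall", "ball hits wall"),
    ("dog nearby", "throw ball at wall", "ball hits wall"),
    ("dog nearby", "throw ball at wall", "dog grabs ball"),
]
caused, failures = caused_test(memory)
```

The sketch shows the weakness described above: the test sorts records into passes and failures, but says nothing about which future, not yet recorded, sensations could be changed.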

Instead, I’ll try another angle, and merely focus on the method of predicting added sensations. I said earlier that sensations were added all the time. This phenomenon establishes the intuitive suggestion that there is such a thing as the future. Assuming that those new sensations resulted from the future events, and are not purely random, I could use my existing memories to pull out a range of possible futures by applying correlations from the past (or even single instances of paired sensations) to construct the next added sensations in the intuitive time chain. This could then be formed into a probability distribution based on the usual statistical robustness criteria (prediction envelope) and then weighted against my previously outlined value system to produce a utility measurement.
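To make this prediction step concrete, here is a rough sketch under strong simplifying assumptions: memory is treated as a single ordered chain of labeled sensations, the probability of the next sensation is just its observed frequency after the current one, and the value system is a plain lookup table. The names `predict_next` and `expected_utility`, the labels, and the numbers are all hypothetical. The statistical robustness criteria (the prediction envelope) are left out.

```python
from collections import Counter

def predict_next(memory, current):
    """Estimate P(next sensation | current) from successor frequencies in memory."""
    successors = Counter(
        nxt for prev, nxt in zip(memory, memory[1:]) if prev == current
    )
    total = sum(successors.values())
    if total == 0:
        return {}  # no precedent in memory, so no prediction
    return {sensation: count / total for sensation, count in successors.items()}

def expected_utility(distribution, value):
    """Weight each predicted sensation by its assumed value; unknown ones count as 0."""
    return sum(p * value.get(sensation, 0.0) for sensation, p in distribution.items())

# Illustrative memory chain and value table (both assumptions).
memory = ["hungry", "eat", "content",
          "hungry", "eat", "content",
          "hungry", "wait", "irritable"]
value = {"eat": 1.0, "wait": -1.0}

dist = predict_next(memory, "hungry")    # "eat" followed "hungry" twice, "wait" once
utility = expected_utility(dist, value)  # 2/3 * 1.0 + 1/3 * (-1.0)
```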

The predictions for the next time quanta would then be based on my existing memory, and then on the newest sensations. It follows that, if correlation is the criterion for prediction, favorable alterations in the future sensations must be either

- Changes in the types of sensations, so as to break the existing correlation patterns

- Changes in the number of sensations, so as to strengthen the existing correlation patterns

- For theoretical completeness, I note the possibility of entirely new types of sensations coming about, which provide additional material for correlation. However, given the low frequency of this occurrence in my memory, and consequent lower probability according to correlation criteria of yielding useful results, I will not focus on this aspect.

My prediction methods are based on correlation, and it is not particularly feasible to modify existing memories: I haven’t said anything about how to do so, and it is very easy to see in my memory that excluding the existing information makes large swaths of memory less predictable. I am therefore mostly relying on sheer luck (the only possible ambiguities being things I have not yet discussed) to introduce new sensation(s) that would tip the predictions to a more favorable probability distribution. Thus I see here that the intuitive idea of causation is useless from the perspective of changing the future (as opposed to explaining and predicting it), since I have to operate outside the previous framework to produce a different prediction.

So what actors are outside our known vocabulary? It could be that I repeatedly forget or am unaware of those influences that cause the changes – but then how could I take them into account when making a prediction? That there are such actors is an interesting and of course non-falsifiable hypothesis, but it doesn’t offer any assistance on whether there are ways to maximize the utility function.

So is there anything at all that could be even altered?

There is one aspect of my consciousness which is easily able to take on different forms – those sensations associated with my thinking and philosophical reflection. It is very easy to see the different ways in which my other sensations can be sorted, compressed, categorized, grouped, etc.

Consider the following possibility (there is of course no proof): if my thinking were itself an actor, then it would be able to add, create, and modify those sensations in its sphere of influence (meaning my reflective thoughts). If there then exists an agent that operates in part on those reflections and produces new sensations, then there would be an effective way to improve my value sensations.

So, from the constructive standpoint (not the theoretically infallible standpoint), I’ve moved the problem at least into an area I can perceive.

At this point I am left with a question, which one could argue has been an unstated assumption from the very beginning of the discussion: is it possible to add/modify/remove thinking sensations? Certainly I perceive that they are modified, and that such activity is quite regular. The patterns certainly suggest orderings to the thoughts – they certainly aren’t totally uncorrelated. However, these thinking sensations are in turn correlated with other sensations. There are very few if any thinking sensations that are truly independent of the pattern or content of other sensations.

I consider this an interesting pattern though: intention, thinking, intention. If I apply the prior discussion, that means that whatever agent causes intention sensations could influence thinking sensations, which in turn would result in the intention sensations that correlate with me actually doing something. In turn, thinking sometimes produces intentions to think. I think this pattern is really what I perceive as my “will” although of course that term is very loaded with meanings that I do not intend to suggest.

In the end, although I can certainly see how thinking sensations might influence actions, I don’t immediately see how thinking sensations can be freely altered, and how this can actually be known.

I should consider also, this question of “freely altering”. It is easy to characterize a particular set of actions or sensations as being consistent with optimization of something, such as a value function. However, these sensations could be entirely correlated (and predicted, if correlation is used as the basis for a prediction) with precursors. There could also be other knowledge processes that could predict with 100% accuracy. In this context, there would be no evidence for a “free” choice, whether that possibility exists or not. Further, if the sensations were entirely consistent with an optimization for my value function, then a freely operating optimizer would not be distinguishable from a fully deterministic process. Therefore, the evidence for a presence capable of freely altering sensations has to at least be partially uncorrelated with fully deterministic/100% correlated processes.

However, an optimized choice, strategy, or other output is fully deterministic in terms of the precursors. To say that particular sets of sensations create a maximal effect on my value function means that out of all possible sensation sets, I have selected only a few, and that this selection is based upon the evaluation in my value function of the predicted outcomes of each sensation set. If meaningful prediction is impossible, or my value function cannot be predicted at points in time, I wouldn’t even know what sensation sets are “optimizing”. If a perceptible freely altering process is not 100% deterministic, and therefore not like an optimizer, then this suggests that a perceptible freely altering process is highly unlikely to consistently match up with the output of an optimizer.

Based on my memory, I do perceive that my perception of what is optimal behavior, and what I have done in certain situations, are not always the same thing. I think that this disconnect is a great source of confusion in the idea of “free will”, “freely altering process”, and so forth, in regards to being able to predict my own behavior, and also in the alignment of my value function and my perception of optimal behavior with what I actually perceive myself to be doing. If what I am doing does not necessarily match what I think is the optimal sensations/choice, then whatever freely altering process(es?) are occurring are not always bound by any restrictions that might exist as a result of my evaluation of sensations/optimization under a utility function. Therefore, it seems at least that there is some aspect of my behavior which cannot be considered as optimizing in terms of my value function.

Further, I recall that, at certain times, my sense of what was optimal at that time, does not match the conclusions of what I consider to be optimal now. This is sometimes true even when I consider the same evidence. This is sometimes true even when I consider the situation and try, as much as I can, to evaluate it using the same value function which I was applying at the time. This, then, indicates error characteristics of my optimization abilities as well, further indicating the fallibility of my ability to successfully optimize situations, even if whatever freely altering function did conform to the recommendations suggested by my optimizer.

Certainly these two instances are consistent with a significant amount of non-deterministic activity, relative to my conscious attempts to comprehend and optimize the situation. Comparing these deviations from the perceived optimal at the time, I perceive that there is a wide gulf (50%? 60%?) between the recommended actions and the actual actions. This is certainly not a well-regulated system – and I know that a large number of other people (e.g. alcohol addicts) struggle with percentages even worse than this.

This leaves maybe 40% of possible freely altered sensations to be the doings of my consciously known optimizer – and as noted before, the evidence for the influence of such an optimizer would be precursors (situation) and reaction to that situation correlated at under 100%, but for which the subsequent actions correlate with the recommendations of the optimizer.

I also recall that my value equation changes with time, and according to certain events. For example, just after eating, eating more food has a zero or negative net value, but 10 hours after eating, it will again have a positive value. To try and keep the knowledge construct simple, I will use the term “instantaneous value equation” for the output of my value function at one specific time period.

If my optimizer reliably/deterministically identifies at least one sensation set that optimizes my instantaneous value equation in that situation, and my instantaneous value equation is defined in terms of value for each universe of possible sensation sets, then this means my optimizer is mapping the situation to at least one recommended output sensation set for that instantaneous value equation. If I then anticipated the onset of the future, and anticipated which situations I might be in, I could then match up the appropriate situational responses for each instantaneous value equation that I could be using. By doing this, the optimal responses can be wholly characterized, and therefore the actions of an optimizer can be evaluated as accurate based on their conformance to this mapping.
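The mapping described here can be sketched as a lookup table from situations to recommended responses, against which actual behavior is then scored. Everything concrete below is an assumption made for illustration: the situation is reduced to hours since eating, the response sensation sets are reduced to two labels, and the linear shape of the value function is invented to match the eating example only in sign.

```python
def instantaneous_value(hours_since_eating):
    """Return a hypothetical instantaneous value function for one situation."""
    def value(response):
        if response == "eat":
            # Assumed shape: negative right after a meal, positive 10 hours later.
            return hours_since_eating / 10.0 - 0.5
        return 0.0  # "wait" is treated as neutral
    return value

def recommend(responses, value):
    """The optimizer: pick a response that maximizes the instantaneous value."""
    return max(responses, key=value)

def response_table(situations, responses):
    """Map each anticipated situation to its recommended response."""
    return {s: recommend(responses, instantaneous_value(s)) for s in situations}

def conformance(table, observed):
    """Fraction of observed (situation, response) pairs that match the table."""
    matches = sum(1 for situation, response in observed
                  if table[situation] == response)
    return matches / len(observed)

table = response_table([0, 2, 10], ["eat", "wait"])
observed = [(0, "wait"), (2, "eat"), (10, "eat")]  # illustrative behavior
rate = conformance(table, observed)
```

With these made-up inputs the table recommends waiting at 0 and 2 hours and eating at 10 hours, so two of the three observed responses conform to it.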

As part of the input into this equation, the variation in the output of my value equation (each instantaneous value equation in sequence) is also a factor, as each subsequent situation is again evaluated. This is equivalent to computing the full value equation at the one point in time, with the input of the future situations as the output.

However, the accuracy of such a computation rapidly decreases for future time intervals, because the randomness/uncertainty/error stemming from the imperfect correlations that constitute my knowledge base of sensations makes it impossible to link even the past sensations together with perfect accuracy – and it is therefore impossible to predict the future sensations with 100% accuracy. This in turn implies a limit on any consciously operating optimizer, which can only use my conscious knowledge base. Even so, the optimizer can still try to optimize according to one of the techniques that seems to produce one of the optimal results over time.
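One way to picture this decay is a sketch that adds an assumption not made above: each link in the chain of predictions holds with the same probability, independently of the others. Under that assumption the accuracy of a chained prediction falls off geometrically with the number of steps. The function names and the figures of 0.9 and 0.5 are purely illustrative.

```python
def chained_accuracy(step_accuracy, steps):
    """Accuracy of a prediction chained over `steps` links, assuming independence."""
    return step_accuracy ** steps

def horizon(step_accuracy, threshold):
    """Largest number of steps whose chained accuracy stays at or above `threshold`."""
    if not 0.0 < step_accuracy < 1.0:
        raise ValueError("step_accuracy must be strictly between 0 and 1")
    if not 0.0 < threshold <= 1.0:
        raise ValueError("threshold must be in (0, 1]")
    steps = 0
    while chained_accuracy(step_accuracy, steps + 1) >= threshold:
        steps += 1
    return steps

# With 90% accuracy per step, predictions stay better than a coin flip
# for only a handful of steps.
usable_steps = horizon(0.9, 0.5)
```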

However, the instantaneous value equation also varies based on the preceding situations (for example whether I managed to get a bite to eat or not), so there is also error in the evaluation of the long-term value equation that has to be contended with. This makes it a lot more difficult, because both the future situations and future instantaneous value equations are now clouded by prediction errors. This opens the possibility of feedback cycles, where bad estimations of the value equation lead to bad reactions, which lead to bad situations, which are again estimated incorrectly, leading to bad reactions… Therefore, the scope of the optimizer is even more limited as a result of this additional source of prediction error.

When estimating the future value equation, the optimizer must also predict the succession of value equations. However, I recall that my instantaneous equation has changed many times, and is not often exactly the same at any given point in time. This makes the job of finding correlations harder. If the instantaneous value equation is split apart via statistical analysis into sub-components that may be evaluated independently, more correlations can be formed, leading to better prediction accuracy. However, this is still a very volatile process.

This all means that the optimizer, which is operating on current consciousness data, is making its decisions in that context. Therefore, to compare the performance of the optimizer across different times to form some sort of meaningful correlation, the old contexts have to be available in memory. Since I forget most of my conscious sensations, this means that the comparison of old optimizer decisions is being made using at least largely incomplete data (if not outright bogus data).

The ultimate utility of the optimizer is measured by how often it really does end up making a decision that, with the benefit of hindsight and the actual values, is optimal (or more optimal than would have been possible without it). Therefore, I look back over the decisions I have made in my life, to understand whether they worked out well, within the limits noted above. If they worked out miserably, then whether there is a “freely altering will” is maybe an interesting question, but not really that helpful until the optimizer can get fixed somehow.

The decisions I have made can’t have been that bad – I have a great job, in a great country, I have my health, and am in a safe situation. Many others in my general situation, or in more favorable situations, have not been able to duplicate these results.

Then I have to ask whether the decisions that were made at each time, were, in fact, predictable, and with what error, based on similar individuals in similar situations. To spare a great deal of explanation, I submit to you that most of my life has been on autopilot, and so while each individual choice has a significant amount of error associated with it, in aggregate, the type of person I have become is a common one in the probability distribution, given the situations I have been in. If I add sensation data like my own feelings, I believe the prediction accuracy could be very high if every measurable piece of data were taken into consideration.

Therefore, this doesn’t provide much hope for accuracy in distinguishing the operation of the optimizer, or of any “freely altering” process. The course of events follows a predictable pattern, and is favorable, so the optimizer and associated “freely altering” process would not necessarily deviate from this course.

I would therefore conclude that it is impossible for me, with my experience, to distinguish the true operation of such forces within myself. By the same facts, it cannot be ruled out as a possibility – and, because it is a constructive hypothesis, it is not an error to behave or think as though it exists, even though, noting all the factors listed above, even a “free will” has many deterministic limitations placed on its operation. The alternative of true determinism is, by weight of the correlations and facts noted above, more likely; however, as it does not afford an opportunity to improve my own happiness, this possibility has to be weighted in predictions as only one possibility (albeit a highly likely one).

To restate: based on my own perception and memory, as well as the logical principles involved, even if the value-maximization hypothesis is assumed, its exact mode of operation is not possible to discern with any metaphysical certainty. Therefore, if in fact the value-maximization hypothesis is constructive, additional assumptions are needed to characterize its operation, so that knowledge processes can operate in order to realize value-maximization.